Elon Musk has done it again—pushed the boundaries of what once seemed impossible, and turned bold sci-fi dreams into real-world technology. His brain chip, developed by Neuralink, is already changing lives. Just ask Noland Arbaugh, a man paralyzed from the shoulders down, who now plays online chess and video games using only his mind.

The chip, implanted directly into the brain, interprets neural signals and turns them into computer commands. It’s innovation at its most powerful.

But for all its promise, the Neuralink brain chip is also stirring waves of anxiety. The same awe that surrounds its success also gives rise to questions, doubts, and fears. While some call it a miracle, others call it a potential Pandora’s box.

Because no matter how revolutionary the technology is, many are still asking: what price do we pay when we invite machines into our minds?

The Neuralink chip, known as “The Link,” has demonstrated remarkable capabilities in its early trials. Volunteers like Arbaugh have been able to move cursors, control computers, and interact with digital environments using nothing but thought.

This level of control has been compared to “using the Force,” a nod to Star Wars, and the metaphor is apt: what was once fantasy can now be done by thought alone.

But even as Elon Musk touts this as a first step toward a human-AI “symbiosis,” critics warn that the implications go far beyond convenience or mobility. Implanting a chip that directly links the brain to machines is no small matter—it’s an invasive and intimate connection that touches on everything from autonomy to privacy.

One of the biggest concerns about Neuralink’s brain chip is control, specifically who holds it. While the device may empower patients, it also introduces new vulnerabilities. The chip collects neural data: the signals behind your intentions and your commands.

That data is sent wirelessly to external devices running Neuralink software. But what happens to that data once it leaves your brain?

Some fear it could be exploited. Imagine a future where brain data could be accessed, intercepted, or even manipulated. If we can decode someone’s intent to move a cursor, could we eventually decode emotions, desires, or secrets? Where is the line between helpful technology and a surveillance tool?

It’s not paranoia—it’s a real discussion about ethics, ownership, and security. People are right to ask: once our thoughts become digital signals, who protects them?

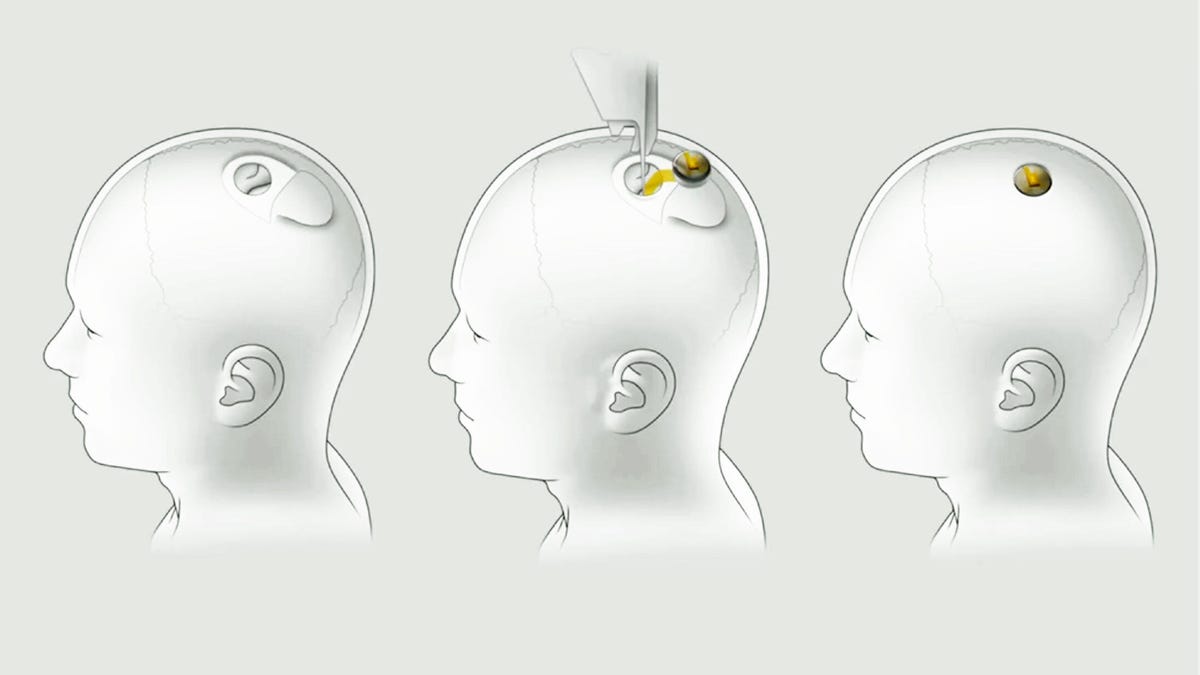

There’s also the matter of permanence. Once the chip is implanted, it becomes part of your biology. Implantation requires a highly specialized robot, which inserts the device’s ultra-thin threads, too fine for human hands, into the motor cortex of the brain.

The surgery takes several hours and places the chip in the part of the brain that governs how we move and interact with the world.

But what happens if the device fails? What if the software malfunctions?

Noland Arbaugh himself experienced a brief period when the chip stopped working—he lost all control of the computer and feared he might never regain access. While Neuralink’s engineers resolved the issue and improved the system, it highlights a crucial point: this is not plug-and-play technology. It’s deeply embedded in the most complex organ of the human body.

If Neuralink were to cease operations, or if support for the device vanished, what would happen to those with chips in their heads? Could they be left stranded, unable to access their digital tools, or worse, suffering from unfixable bugs lodged inside their brains?

Beyond technical concerns, there’s the question of psychological impact. How does it feel to know a machine is inside your mind? For early adopters, the thrill of regaining mobility or autonomy may overshadow any unease.

But as the technology becomes more common, some fear a divide may grow between those who are enhanced and those who are not.

Will society begin to judge people based on their cognitive upgrades? Will brain chips become the new standard for communication, work, or entertainment? There’s a slippery slope here—between medical necessity and enhancement for profit or prestige.

And then there’s the philosophical dilemma. If thoughts can be captured, recorded, and analyzed, does it change the way we think?

Knowing your brain activity might be tracked could alter behavior, stifle spontaneity, or make people more self-conscious. Privacy, once thought of in terms of location or identity, now becomes an issue of internal experience.

Another looming concern is how far regulation lags behind the technology. Neuralink’s clinical trials are FDA-approved, but that doesn’t mean the ethical questions have been answered. As the technology progresses, how do we ensure it’s used responsibly? Who sets the rules for what brain data can be collected, stored, or shared?

There is no established global standard for brain-machine interfaces. What’s legal in one country may be prohibited in another. This creates a vacuum of accountability—and that’s dangerous when dealing with something as personal as the mind.

As Neuralink begins trials in new cities like Miami and expands its reach, pressure is mounting for governments, medical boards, and ethical committees to catch up. Because once these devices become widespread, reining them in will be much harder.

To be clear, no one is arguing against progress. The benefits for people with paralysis, ALS, or severe neurological conditions are undeniable.

Giving someone the ability to control technology with their thoughts is extraordinary. It’s not a small achievement—it’s a life-changing one.

But every leap forward brings risks. And while Musk’s vision of merging human brains with AI may sound exhilarating to some, it alarms others.

Because at its core, this isn’t just a story about chips and wires—it’s about how much of ourselves we’re willing to give up in the name of advancement.

Neuralink’s brain chip is both a medical marvel and a societal wake-up call. It shows us what’s possible, but also forces us to confront what’s at stake. The technology is here. The power is real. But with that power comes responsibility, not just for the creators, but for all of us.

Despite its early success, Neuralink’s brain chip sits at the center of a growing debate. On one side are the lives it’s helping to transform, like Noland Arbaugh’s. On the other are concerns about privacy, control, and ethics that can’t be ignored.

For every person excited by the possibilities, there’s another worried about the consequences. And that balance—between wonder and worry—will shape how we move forward with technology that reaches into the very heart of who we are.
